
Higher Performance and Larger, Faster Memory

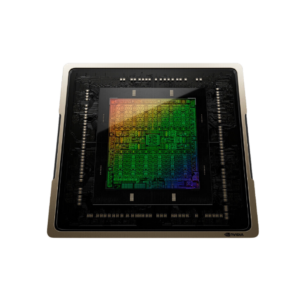

The NVIDIA H200 Tensor Core GPU supercharges generative AI and high-performance computing (HPC) workloads with game-changing performance and memory capabilities.

Based on the NVIDIA Hopper™ architecture, the NVIDIA H200 is the first GPU to offer 141 gigabytes (GB) of HBM3e memory at 4.8 terabytes per second (TB/s), nearly double the capacity of the NVIDIA H100 Tensor Core GPU with 1.4X the memory bandwidth. The H200's larger and faster memory accelerates generative AI and large language models, while advancing scientific computing for HPC workloads with better energy efficiency and lower total cost of ownership.
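The capacity and bandwidth ratios quoted above can be checked with simple arithmetic. This sketch assumes an H100 SXM baseline of 80 GB of HBM3 at 3.35 TB/s (figures not stated on this page); the H200 figures are the ones listed here.

```python
# Assumed baseline: H100 SXM with 80 GB HBM3 at 3.35 TB/s (not stated on this page).
H100_MEMORY_GB = 80.0
H100_BANDWIDTH_TBS = 3.35

# H200 figures as listed on this page.
H200_MEMORY_GB = 141.0
H200_BANDWIDTH_TBS = 4.8


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


capacity_ratio = ratio(H200_MEMORY_GB, H100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBS, H100_BANDWIDTH_TBS)

print(f"Capacity:  {capacity_ratio:.2f}x")   # Capacity:  1.76x
print(f"Bandwidth: {bandwidth_ratio:.2f}x")  # Bandwidth: 1.43x
```

Under these assumptions, "nearly double" works out to about 1.76x the capacity, and the bandwidth gain rounds to roughly 1.4x.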

NVIDIA H200 Tensor Core SXM1 141GB 700W

$30,400.00

✔ Form Factor: H200 SXM1

✔ FP64: 34 TFLOPS

✔ FP64 Tensor Core: 67 TFLOPS

✔ FP32: 67 TFLOPS

✔ TF32 Tensor Core: 989 TFLOPS (with sparsity)

✔ BFLOAT16 Tensor Core: 1,979 TFLOPS (with sparsity)

✔ FP16 Tensor Core: 1,979 TFLOPS (with sparsity)

✔ FP8 Tensor Core: 3,958 TFLOPS (with sparsity)

✔ INT8 Tensor Core: 3,958 TOPS (with sparsity)

✔ GPU Memory: 141GB

✔ GPU Memory Bandwidth: 4.8TB/s

✔ Decoders: 7 NVDEC, 7 JPEG

✔ Max Thermal Design Power (TDP): Up to 700W (configurable)

✔ Multi-Instance GPUs: Up to 7 MIGs @16.5GB each

✔ Interconnect: NVIDIA NVLink®: > 900GB/s, PCIe Gen5: 128GB/s

✔ Server Options: NVIDIA HGX™ H200 partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs; NVIDIA AI Enterprise available as an add-on

✔ Warranty: 3 years return-to-base repair or replace
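To put the 141GB figure in context for the 4- and 8-GPU server options, the sketch below estimates whether a model's weights alone fit in the aggregate GPU memory of a 1-, 4-, or 8-GPU system. It is a rough, hypothetical estimate: it assumes 2 bytes per parameter for FP16/BF16 and 1 byte for FP8, treats 141GB as 141 × 10^9 bytes, and ignores the KV cache, activations, and framework overhead, all of which need additional memory in practice. The helper names `weight_bytes` and `weights_fit` are illustrative only.

```python
# Hypothetical sizing helper: counts model weights only
# (no KV cache, activations, or framework overhead).
BYTES_PER_PARAM = {"fp16": 2, "bf16": 2, "fp8": 1}
H200_MEMORY_BYTES = 141e9  # assumes decimal gigabytes


def weight_bytes(num_params: float, dtype: str) -> float:
    """Bytes needed to store the model weights in the given dtype."""
    return num_params * BYTES_PER_PARAM[dtype]


def weights_fit(num_params: float, dtype: str, num_gpus: int) -> bool:
    """True if the weights fit in the aggregate memory of `num_gpus` H200s."""
    return weight_bytes(num_params, dtype) <= num_gpus * H200_MEMORY_BYTES


if __name__ == "__main__":
    for params in (70e9, 405e9):
        for dtype in ("bf16", "fp8"):
            for gpus in (1, 4, 8):
                status = "fits" if weights_fit(params, dtype, gpus) else "does not fit"
                print(f"{params / 1e9:.0f}B params, {dtype}, {gpus} GPU(s): {status}")
```

For example, 70 billion parameters at BF16 need about 140 × 10^9 bytes, which only just fits within one GPU's 141GB and leaves almost no room for the KV cache, so this check is a lower bound on the memory a real deployment needs.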

This is a preorder for the September production batch, with tentative delivery in October 2024. All sales are final; no returns or cancellations. For bulk inquiries, contact a live chat agent or call our toll-free number.

| Technical Specifications | Values |
|---|---|
| Form Factor | H200 SXM1 |
| FP64 | 34 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS |
| FP32 | 67 TFLOPS |
| TF32 Tensor Core | 989 TFLOPS (with sparsity) |
| BFLOAT16 Tensor Core | 1,979 TFLOPS (with sparsity) |
| FP16 Tensor Core | 1,979 TFLOPS (with sparsity) |
| FP8 Tensor Core | 3,958 TFLOPS (with sparsity) |
| INT8 Tensor Core | 3,958 TOPS (with sparsity) |
| GPU Memory | 141GB |
| GPU Memory Bandwidth | 4.8TB/s |
| Decoders | 7 NVDEC, 7 JPEG |
| Max Thermal Design Power (TDP) | Up to 700W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @16.5GB each |
| Interconnect | NVIDIA NVLink®: > 900GB/s, PCIe Gen5: 128GB/s |
| Server Options | NVIDIA HGX™ H200 partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs; NVIDIA AI Enterprise available as an add-on |
