Powering the Next Generation of AI

Artificial intelligence is transforming almost every business by automating tasks, enhancing customer service, generating insights, and enabling innovation. It is no longer a futuristic concept but a reality that is reshaping how businesses operate. As AI workloads grow, however, they demand significantly more compute capacity than most enterprises have available. To take advantage of AI, enterprises need high-performance computing, storage, and networking that are secure, reliable, and efficient.

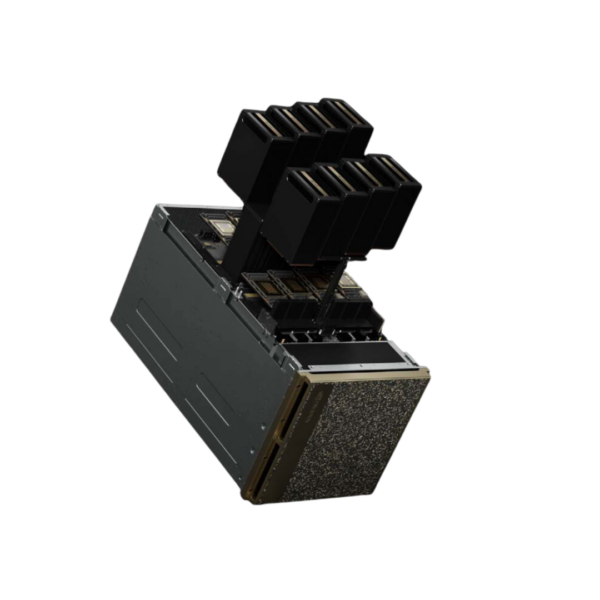

Enter NVIDIA DGX™ B200, the latest addition to the NVIDIA DGX platform. This unified AI platform defines the next chapter of generative AI by taking full advantage of NVIDIA Blackwell GPUs and high-speed interconnects. Configured with eight Blackwell GPUs, DGX B200 delivers unparalleled generative AI performance with a massive 1.4 terabytes (TB) of GPU memory and 64 terabytes per second (TB/s) of memory bandwidth, making it uniquely suited to handle any enterprise AI workload.

With NVIDIA DGX B200, enterprises can equip their data scientists and developers with a universal AI supercomputer to accelerate their time to insight and fully realize the benefits of AI for their businesses.
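For a sense of scale, the aggregate figures quoted above (eight GPUs, 1,440GB of GPU memory, 64TB/s of memory bandwidth) can be broken down per GPU and used for a rough sizing check. The Python sketch below is illustrative only: the per-GPU values are derived by simple division rather than quoted from the listing, and `fits_in_gpu_memory` with its `headroom` default of 0.8 is a hypothetical helper that counts model weights only, ignoring activations, caches, and optimizer state.

```python
# Figures taken from the listing above; per-GPU values are derived, not quoted.
GPU_COUNT = 8
TOTAL_GPU_MEMORY_GB = 1440
TOTAL_MEMORY_BANDWIDTH_TBPS = 64


def per_gpu(total, count=GPU_COUNT):
    """Split an aggregate system figure evenly across the GPUs."""
    return total / count


memory_per_gpu_gb = per_gpu(TOTAL_GPU_MEMORY_GB)
bandwidth_per_gpu_tbps = per_gpu(TOTAL_MEMORY_BANDWIDTH_TBPS)


def fits_in_gpu_memory(params_billions, bytes_per_param, headroom=0.8):
    """Rough check: do the model weights alone fit in a share of GPU memory?

    headroom is an assumed fraction of total memory reserved for weights;
    real workloads also need room for activations and other buffers.
    """
    # 1e9 parameters * bytes per parameter / 1e9 bytes per GB
    weights_gb = params_billions * bytes_per_param
    return weights_gb <= TOTAL_GPU_MEMORY_GB * headroom


if __name__ == "__main__":
    print(f"{memory_per_gpu_gb:.0f} GB per GPU")
    print(f"{bandwidth_per_gpu_tbps:.0f} TB/s per GPU")
    # Hypothetical 405-billion-parameter model at 2 bytes per parameter
    print(fits_in_gpu_memory(405, 2))
```

Under these assumptions, a hypothetical 405-billion-parameter model stored at 2 bytes per parameter needs about 810GB for weights and passes the check, while the same model at 4 bytes per parameter does not.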

NVIDIA DGX B200 Blackwell 1,440GB 4TB AI Supercomputer

✓ GPU: 8x NVIDIA Blackwell GPUs

✓ GPU Memory: 1,440GB total

✓ Performance: 72 petaFLOPS for training, 144 petaFLOPS for inference

✓ NVIDIA® NVSwitch™: 2x

✓ System Power Usage: Approximately 14.3kW max

✓ CPU: 2 Intel® Xeon® Platinum 8570 Processors, 112 Cores total, 2.1 GHz (Base), 4 GHz (Max Boost)

✓ System Memory: Up to 4TB

✓ Networking:

4x OSFP ports for NVIDIA ConnectX-7 VPI, up to 400Gb/s InfiniBand/Ethernet

2x dual-port QSFP112 NVIDIA BlueField-3 DPU, up to 400Gb/s InfiniBand/Ethernet

✓ Management Network: 10Gb/s onboard NIC with RJ45, 100Gb/s dual-port Ethernet NIC, Host BMC with RJ45

✓ Storage:

OS: 2x 1.9TB NVMe M.2

Internal: 8x 3.84TB NVMe U.2

✓ Software: NVIDIA AI Enterprise, NVIDIA Base Command, DGX OS / Ubuntu

✓ Rack Units (RU): 10 RU

✓ System Dimensions: Height: 17.5in, Width: 19.0in, Length: 35.3in

✓ Operating Temperature: 5–30°C (41–86°F)

Q4 2024 RELEASE

| Specification | Detail |
|---|---|
| GPU | 8x NVIDIA Blackwell GPUs |
| GPU Memory | 1,440GB total |
| Performance | 72 petaFLOPS (PFLOPS) training, 144 PFLOPS inference |
| NVIDIA® NVSwitch™ | 2x |
| System Power Usage | ~14.3kW max |
| CPU | 2 Intel® Xeon® Platinum 8570 Processors, 112 Cores total, 2.1 GHz (Base), 4 GHz (Max Boost) |
| System Memory | Up to 4TB |
| Networking | 4x OSFP ports for NVIDIA ConnectX-7 VPI, up to 400Gb/s InfiniBand/Ethernet; 2x dual-port QSFP112 NVIDIA BlueField-3 DPU, up to 400Gb/s InfiniBand/Ethernet |
| Management Network | 10Gb/s onboard NIC with RJ45, 100Gb/s dual-port Ethernet NIC, Host BMC with RJ45 |
| Storage | OS: 2x 1.9TB NVMe M.2; Internal: 8x 3.84TB NVMe U.2 |
| Software | NVIDIA AI Enterprise, NVIDIA Base Command, DGX OS / Ubuntu |
| Rack Units (RU) | 10 RU |
| System Dimensions | Height: 17.5in (444mm), Width: 19.0in (482.2mm), Length: 35.3in (897.1mm) |
| Operating Temperature | 5–30°C (41–86°F) |
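
When planning a deployment, the 10 RU height and the roughly 14.3kW maximum power draw listed above are usually the two limits that decide how many systems fit in a rack. The Python sketch below shows one way to estimate that. The rack sizes and power budgets in the example are assumptions chosen for illustration, `systems_per_rack` is a hypothetical helper, and the estimate ignores cooling, switches, PDUs, and any power safety margin a facility may require.

```python
import math

# Figures taken from the specification table above.
SYSTEM_RACK_UNITS = 10
SYSTEM_MAX_POWER_KW = 14.3


def systems_per_rack(rack_units, rack_power_kw):
    """Estimate how many systems fit in one rack.

    The result is the smaller of the space limit and the power limit.
    rack_units and rack_power_kw describe the rack and are supplied by the
    caller; they are not part of the product specification.
    """
    by_space = rack_units // SYSTEM_RACK_UNITS
    by_power = math.floor(rack_power_kw / SYSTEM_MAX_POWER_KW)
    return min(by_space, by_power)


if __name__ == "__main__":
    # Assumed example racks: (rack units, power budget in kW)
    for units, power_kw in [(42, 30.0), (48, 60.0), (42, 14.0)]:
        count = systems_per_rack(units, power_kw)
        print(f"{units}U rack at {power_kw}kW: {count} system(s)")
```

In the first assumed case, a 42U rack has space for four systems but a 30kW budget covers only two at maximum draw, so power is the limiting factor.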
