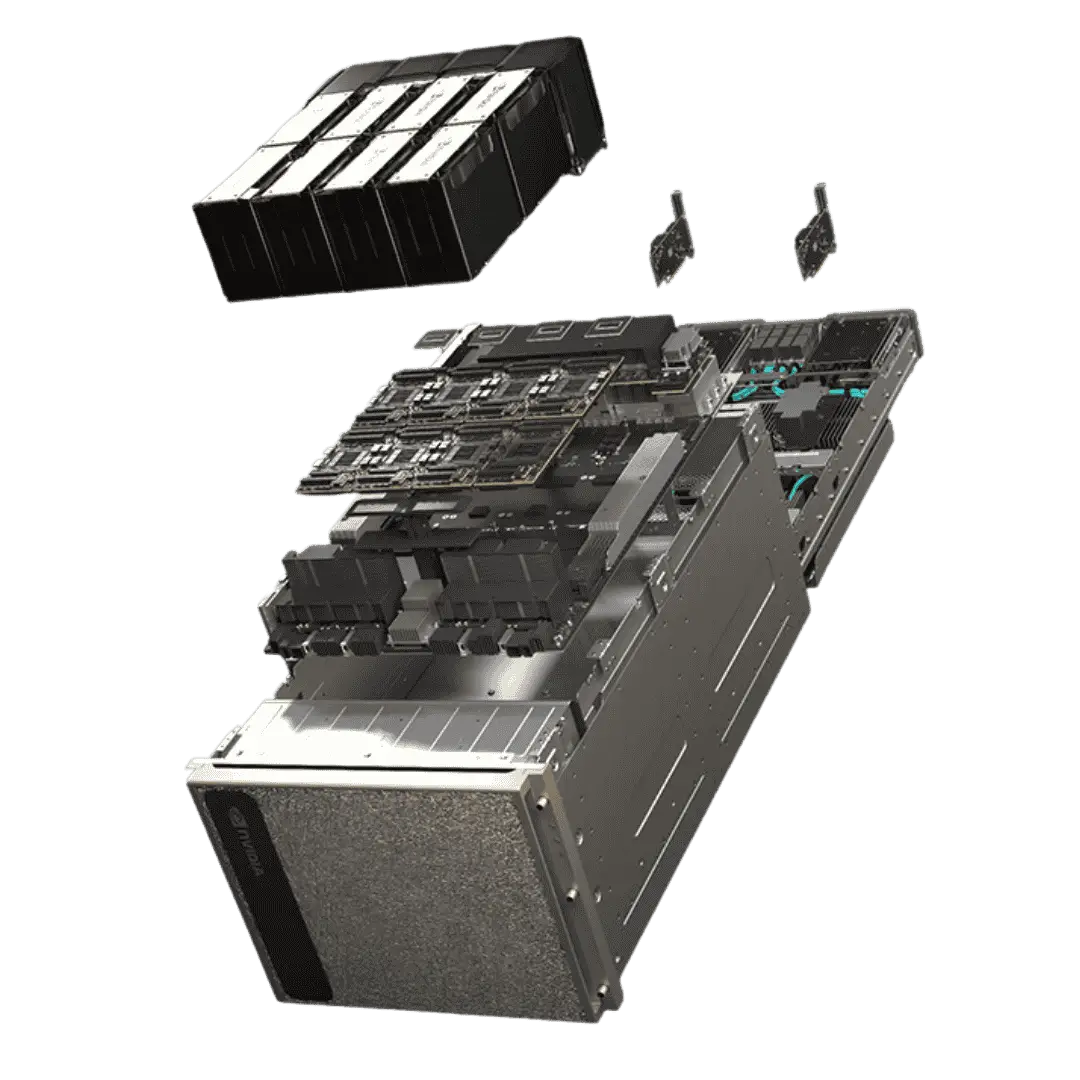

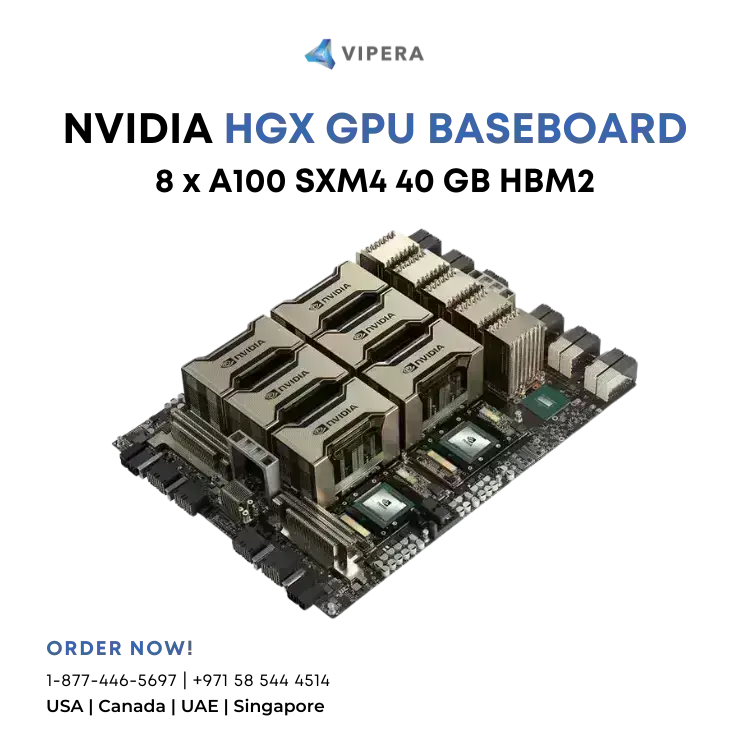

✓ Equipped with 8x NVIDIA H100 Tensor Core GPUs (SXM5)

✓ GPU memory totals 640GB

✓ Delivers up to 32 petaFLOPS of FP8 performance (with sparsity)

✓ Incorporates 4x NVIDIA® NVSwitch™ chips

✓ System power usage peaks at ~10.2kW

✓ Employs dual 56-core 4th Gen Intel® Xeon® Scalable processors

✓ Provides 2TB of system memory

✓ Offers networking via 4x OSFP ports and NVIDIA ConnectX-7 VPI adapters, with options for 400 Gb/s InfiniBand or 200 Gb/s Ethernet

✓ Features a 10 Gb/s onboard NIC with RJ45 for the management network, with an optional 50 Gb/s Ethernet NIC

✓ Storage includes 2x 1.92TB NVMe M.2 drives for the OS and 8x 3.84TB NVMe U.2 drives for internal storage

✓ Comes pre-loaded with the NVIDIA AI Enterprise software suite and NVIDIA Base Command, with a choice of Ubuntu, Red Hat Enterprise Linux, or CentOS operating systems

✓ Operates within a temperature range of 5–30°C (41–86°F)

✓ 3-year manufacturer parts or replacement warranty included (return-to-base only)
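Most figures in the list are node-level totals. As a rough sanity check, the minimal Python sketch below derives a few per-GPU and aggregate values from them. The `NodeSpec` class, its field names, and the `celsius_to_fahrenheit` helper are hypothetical illustrations. The numbers are copied from the list, and the results are simple divisions and products, not measured performance or usable (post-RAID) capacity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Hypothetical container for headline figures copied from the spec list."""
    gpu_count: int = 8
    total_gpu_memory_gb: float = 640.0
    fp8_petaflops: float = 32.0  # headline FP8 figure as listed
    data_drives: int = 8
    data_drive_tb: float = 3.84

    def memory_per_gpu_gb(self) -> float:
        # Total GPU memory split evenly across the GPUs
        return self.total_gpu_memory_gb / self.gpu_count

    def fp8_petaflops_per_gpu(self) -> float:
        # Headline FP8 throughput split evenly across the GPUs
        return self.fp8_petaflops / self.gpu_count

    def internal_storage_tb(self) -> float:
        # Raw capacity of the U.2 data drives, ignoring RAID and filesystem overhead
        return self.data_drives * self.data_drive_tb


def celsius_to_fahrenheit(c: float) -> float:
    # Standard conversion, used here to check the listed operating range
    return c * 9 / 5 + 32


if __name__ == "__main__":
    spec = NodeSpec()
    print(spec.memory_per_gpu_gb())       # 80.0
    print(spec.fp8_petaflops_per_gpu())   # 4.0
    print(round(spec.internal_storage_tb(), 2))  # 30.72
    print(celsius_to_fahrenheit(5), celsius_to_fahrenheit(30))  # 41.0 86.0
```

With the listed values, this works out to 80GB of memory and 4 petaFLOPS of FP8 per GPU, about 30.72TB of raw internal NVMe storage, and an operating range of 41–86°F, matching the Fahrenheit figures given above.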