In the current tech landscape, software capable of producing text or visuals indistinguishable from human work has sparked intense competition. Major corporations such as Microsoft and Google are racing to embed state-of-the-art AI into their search engines, while startups such as OpenAI and Stability AI, the company behind Stable Diffusion, have pulled ahead by releasing their software to the public.

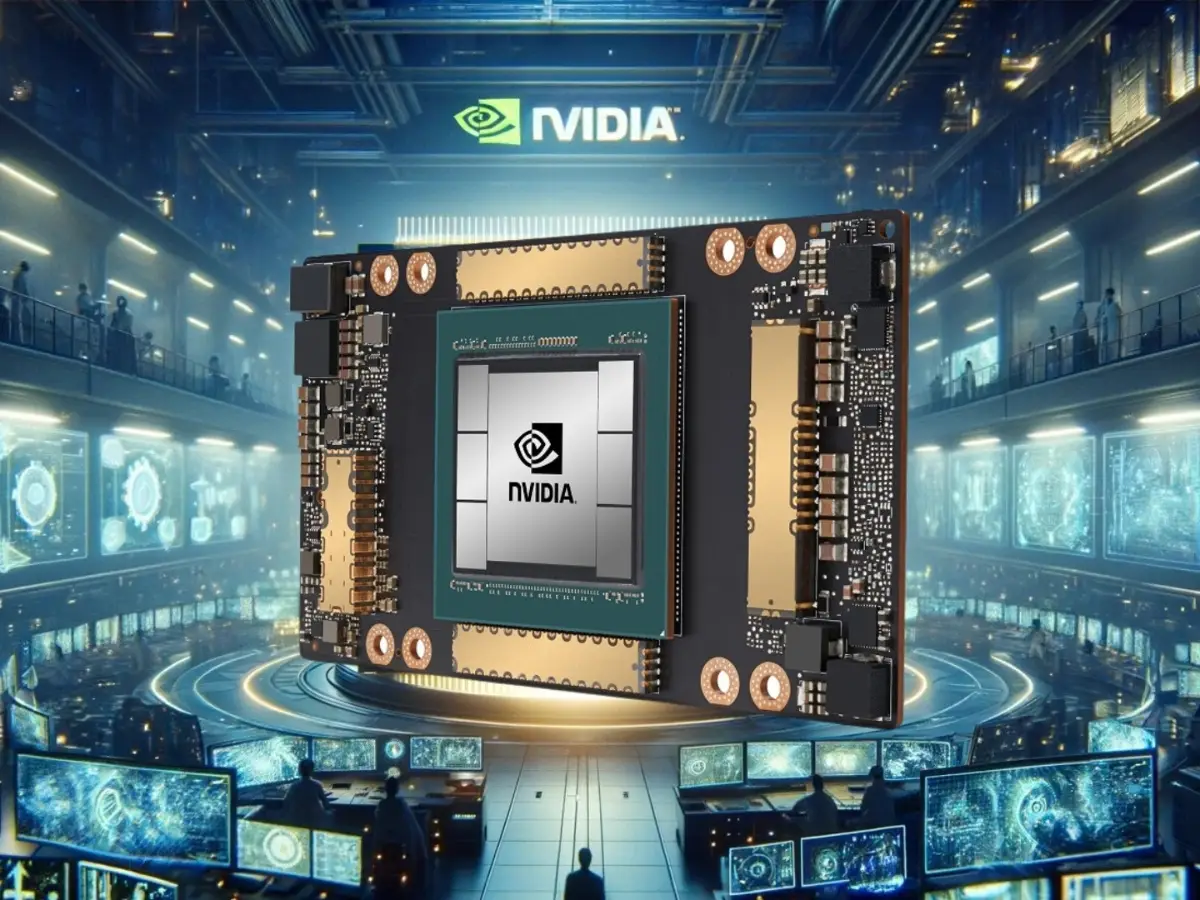

Nvidia's A100: The $10,000 Engine Behind AI Innovation

At the center of many AI applications is the Nvidia A100, a chip priced at around $10,000 that has become a pivotal tool for the artificial intelligence sector. Nathan Benaich, an investor who publishes a newsletter and reports on the AI industry, describes the A100 as the current workhorse for AI professionals. According to New Street Research, Nvidia holds 95% of the market for graphics processors that can be used for machine learning, which goes a long way toward explaining the A100's central role.

The Workhorse of Modern AI

The A100 is more than just another component; it underpins much of today's AI work. The technology behind it was first used to render complex 3D graphics in video games, but the A100 itself is built for the demands of machine learning. Its strength is executing many simple computations simultaneously, which is exactly what training and running neural network models requires.
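To make "many simple computations simultaneously" concrete, here is a minimal Python sketch of a single fully connected neural network layer. The weights, inputs, and the 4,096-wide layer size are made up for illustration and are not taken from any real model. Each output is an independent dot product, so none has to wait on another; plain Python computes them one after another, whereas a GPU can spread this kind of work across thousands of cores.

```python
from typing import List

def dense_forward(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Compute y[i] = sum_j weights[i][j] * x[j] + bias[i] for every output i.

    Each output depends only on the shared input and its own weight row,
    so all outputs could be computed at the same time on parallel hardware.
    """
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weights, bias)]

def count_multiply_adds(n_inputs: int, n_outputs: int) -> int:
    """Every output needs n_inputs multiply-adds, independent of the other outputs."""
    return n_inputs * n_outputs

# Toy values, invented for illustration.
x = [1.0, 2.0, 3.0]
weights = [[0.5, -1.0, 2.0], [1.0, 1.0, 1.0]]
bias = [0.0, 1.0]

print(dense_forward(x, weights, bias))   # [4.5, 7.0]
print(count_multiply_adds(4096, 4096))   # 16777216
```

Because every output neuron reads the same inputs but writes only its own result, the work parallelizes naturally; a single 4,096-by-4,096 layer already amounts to roughly 16.8 million independent multiply-adds per input.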

From Gaming to AI: The Evolution of Nvidia's Technology

Although it is built on technology originally developed for gaming graphics, the A100 runs not in gaming PCs but in data centers. This shift reflects Nvidia's strategic repositioning towards AI, serving both large corporations and startups. These companies rely heavily on Nvidia's technology, buying hundreds or thousands of chips, either directly or through cloud providers, to power their AI-driven applications.

The Nvidia A100: An AI Powerhouse

The A100 has quickly become the go-to processor for AI specialists. As Benaich points out, it is the prime choice for running sophisticated machine learning models, and Nvidia, its maker, holds a 95% share of the market for graphics processors used in machine learning, according to New Street Research. Applications such as ChatGPT, Bing AI, and Stable Diffusion rely on the chip's ability to execute many calculations at once, a critical capability for training and deploying neural networks.

The technology behind the A100 was originally conceived for rendering complex 3D graphics in video games, but the chip itself has moved far beyond those roots. Nvidia configures and targets it specifically at machine learning, so it lives in data centers rather than gaming setups. This reflects growing demand from established companies and startups alike, which need vast quantities of these GPUs to build next-generation chatbots and image generators.

The Backbone of Artificial Intelligence Development

Training large-scale AI models requires hundreds, if not thousands, of powerful GPUs like the A100. These chips must process terabytes of data quickly to recognize patterns. GPUs are then needed again for “inference,” the phase in which the trained model is put to work: generating text, making predictions, or recognizing objects in images.
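The two phases can be illustrated at toy scale. The Python sketch below is a hypothetical stand-in, not how any production model is trained: `train` fits a two-parameter linear model by gradient descent to invented data that follows y = 2x + 1, and `infer` then applies the learned parameters to a new input. Large models repeat the same kind of training loop over billions of parameters and terabytes of data, which is where GPUs like the A100 do the heavy lifting.

```python
from typing import List, Tuple

Params = Tuple[float, float]

def train(data: List[Tuple[float, float]], lr: float = 0.05, epochs: int = 2000) -> Params:
    """Training phase: fit y ~ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def infer(params: Params, x: float) -> float:
    """Inference phase: apply the already-trained parameters to a new input."""
    w, b = params
    return w * x + b

# Invented training data following the pattern y = 2x + 1.
data = [(float(x), 2.0 * x + 1.0) for x in range(5)]
params = train(data)
print(f"{infer(params, 10):.2f}")  # 21.00
```

Training is the expensive, repeated part; inference is a single cheap pass per input, although serving millions of users turns those cheap passes into a large workload of its own.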

For AI ventures, securing a substantial inventory of A100 GPUs has become a benchmark of progress and ambition. Emad Mostaque, CEO of Stability AI, the company behind the Stable Diffusion image generator, illustrated this by sharing that the company had grown from 32 A100s to more than 5,400. That jump reflects the aggressive scaling under way across the industry, fueled by Nvidia's chips.
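A quick back-of-the-envelope calculation puts Stability AI's figures in perspective. The sketch assumes every chip cost the roughly $10,000 price cited above; it ignores volume discounts, cloud rentals, servers, networking, and power, and the company's actual spending is not stated here.

```python
# Assumption: every A100 costs the ~$10,000 figure cited in the article.
A100_PRICE_USD = 10_000

def fleet_growth(before: int, after: int) -> float:
    """How many times larger the GPU fleet became."""
    return after / before

def rough_chip_cost(count: int, unit_price: int = A100_PRICE_USD) -> int:
    """Chip-only cost at a flat unit price (no servers, networking, or discounts)."""
    return count * unit_price

print(fleet_growth(32, 5_400))           # 168.75
print(f"${rough_chip_cost(5_400):,}")    # $54,000,000
```

At that assumed price, 5,400 A100s represent on the order of $54 million in chips alone, and the fleet grew by a factor of roughly 169.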

Nvidia's Ascendancy in the AI Boom

Nvidia's data center business, which includes its AI chips, grew 11% to more than $3.6 billion in quarterly revenue. Nvidia's stock has also risen 65%, and the results highlight the strategic pivot towards AI that CEO Jensen Huang has articulated.
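Working the 11% figure backwards gives a sense of the baseline. This sketch treats the revenue as exactly $3.6 billion (the text says "more than") and assumes the 11% growth is measured against a comparable earlier period, which the text does not specify.

```python
def implied_prior_revenue(current: float, growth_rate: float) -> float:
    """Solve current = prior * (1 + growth_rate) for prior."""
    return current / (1 + growth_rate)

# Figures in billions of US dollars; 3.6 is treated as exact for simplicity.
prior = implied_prior_revenue(3.6, 0.11)
print(f"${prior:.2f} billion")  # $3.24 billion
```

In other words, 11% growth corresponds to roughly $0.36 billion in added revenue over that baseline.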

Beyond Burst Computing

Machine learning workloads differ sharply from traditional computing tasks such as serving webpages, which use processing power only in intermittent bursts. AI applications, by contrast, can occupy an entire computer's resources for hours or even days at a time.
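The difference between bursty and sustained load shows up clearly in utilization figures. The Python sketch below uses two invented ten-minute traces, one for a web server and one for a training job; the numbers are hypothetical and only meant to show how the two patterns compare, not to describe real hardware measurements.

```python
from typing import List

def average_utilization(samples: List[float]) -> float:
    """Mean of per-interval utilization samples, each between 0 and 1."""
    return sum(samples) / len(samples)

def busy_fraction(samples: List[float], threshold: float = 0.9) -> float:
    """Share of intervals in which the machine is essentially fully occupied."""
    return sum(1 for s in samples if s >= threshold) / len(samples)

# Hypothetical one-minute utilization samples over ten minutes.
web_server = [0.05, 0.80, 0.10, 0.05, 0.95, 0.10, 0.05, 0.05, 0.70, 0.05]
training_job = [0.98] * 10

for name, trace in [("web server", web_server), ("training job", training_job)]:
    print(f"{name}: avg {average_utilization(trace):.2f}, near-full {busy_fraction(trace):.0%}")
# web server: avg 0.29, near-full 10%
# training job: avg 0.98, near-full 100%
```

With these made-up traces, the web server averages about 29% utilization and is near capacity in one interval out of ten, while the training job stays near full load the entire time.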

Nvidia's High-End Solutions

The financial outlay for these GPUs is substantial. Beyond buying individual A100 chips, many data centers opt for Nvidia's DGX A100 system, which bundles eight A100 GPUs to work together. The system is listed at nearly $200,000.
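Dividing the system price by its GPU count shows what a DGX A100 costs per GPU. This sketch uses $200,000 as a round stand-in for "nearly $200,000" and assumes the roughly $10,000 standalone chip price also applies to the GPUs inside the system; the text does not break the system price down further.

```python
# Figures from the article; 200,000 stands in for "nearly $200,000".
DGX_A100_PRICE_USD = 200_000
GPUS_PER_DGX = 8
# Assumption: the ~$10,000 standalone A100 price also applies inside the system.
A100_PRICE_USD = 10_000

per_gpu_slot = DGX_A100_PRICE_USD / GPUS_PER_DGX
gpu_share = GPUS_PER_DGX * A100_PRICE_USD / DGX_A100_PRICE_USD

print(f"${per_gpu_slot:,.0f} per GPU slot")      # $25,000 per GPU slot
print(f"{gpu_share:.0%} of the price is GPUs")   # 40% of the price is GPUs
```

Under those assumptions, each GPU slot costs about $25,000, and the eight chips account for about 40% of the price, with the rest paying for the remainder of the system.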

Training and Inference Costs

Training AI models is also resource-intensive. The latest version of Stable Diffusion, for instance, took 200,000 compute hours on 256 A100 GPUs. Investments on this scale show how costly both training and deploying AI models can be.
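Assuming the 200,000 compute hours are GPU-hours split evenly across all 256 A100s running in parallel (the text does not say exactly how they were counted), the training run would have lasted about a month of wall-clock time. The `price_per_gpu_hour` parameter below is a hypothetical placeholder, not a quoted cloud rate.

```python
def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Wall-clock duration if the GPU-hours are divided evenly across GPUs running in parallel."""
    return gpu_hours / num_gpus / 24

def training_cost(gpu_hours: float, price_per_gpu_hour: float) -> float:
    """Total compute cost at a flat, hypothetical price per GPU-hour."""
    return gpu_hours * price_per_gpu_hour

print(f"{wall_clock_days(200_000, 256):.1f} days")   # 32.6 days
# Swap in a real rate to estimate cost; 2.0 here is only a placeholder.
print(f"${training_cost(200_000, 2.0):,.0f}")        # $400,000
```

If not all 256 GPUs ran for the whole period, or the hours were counted differently, the actual duration would differ from this estimate.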

Emerging Rivals and the Future of AI Hardware

While Nvidia currently dominates the AI hardware landscape, it faces competition from other tech giants like AMD and Intel, alongside cloud providers such as Google and Amazon, who are all developing AI-specific chips. Despite this, Nvidia’s influence remains pervasive, as evidenced by the widespread use of its chips in AI research and development.

The A100's strategic importance is further reflected in U.S. government restrictions on its export to certain countries.